Manual Chapter: Configuring Layer 3 nPath Routing

Applies To:

BIG-IP APM

- 21.0.0, 17.5.1, 17.5.0, 17.1.3, 17.1.2, 17.1.1, 17.1.0, 17.0.0

BIG-IP Analytics

- 21.0.0, 17.5.1, 17.5.0, 17.1.3, 17.1.2, 17.1.1, 17.1.0, 17.0.0

BIG-IP Link Controller

- 21.0.0, 17.5.1, 17.5.0, 17.1.3, 17.1.2, 17.1.1, 17.1.0, 17.0.0

BIG-IP LTM

- 21.0.0, 17.5.1, 17.5.0, 17.1.3, 17.1.2, 17.1.1, 17.1.0, 17.0.0

BIG-IP AFM

- 21.0.0, 17.5.1, 17.5.0, 17.1.3, 17.1.2, 17.1.1, 17.1.0, 17.0.0

BIG-IP PEM

- 21.0.0, 17.5.1, 17.5.0, 17.1.3, 17.1.2, 17.1.1, 17.1.0, 17.0.0

BIG-IP DNS

- 21.0.0, 17.5.1, 17.5.0, 17.1.3, 17.1.2, 17.1.1, 17.1.0, 17.0.0

BIG-IP ASM

- 21.0.0, 17.5.1, 17.5.0, 17.1.3, 17.1.2, 17.1.1, 17.1.0, 17.0.0

Overview: Layer 3 nPath routing

Using Layer 3 nPath routing, you can load balance traffic over a routed topology in your data center. In this deployment, the server sends its responses directly back to the client, even when the servers, and any intermediate routers, are on different networks. This routing method uses IP encapsulation to create a uni-directional outbound tunnel from the server pool to the server.

You can also override the encapsulation for a specified pool member, and either remove that pool member from any encapsulation or specify a different encapsulation protocol. The available encapsulation protocols are IPIP and GRE.

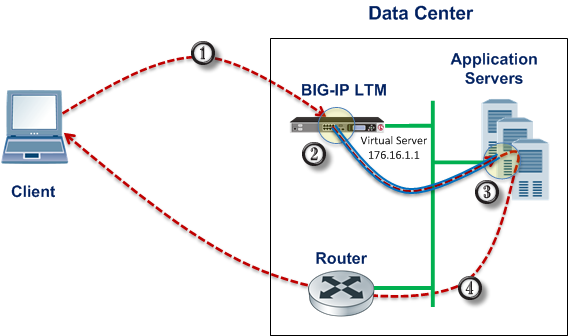

Example of a Layer 3 routing configuration

This illustration shows the path of a packet in a deployment that uses Layer 3 nPath routing through a tunnel.

- The client sends traffic to a Fast L4 virtual server.

- The pool encapsulates the packet and sends it through a tunnel to the server.

- The server removes the encapsulation header and returns the packet to the network.

- The target application receives the original packet, processes it, and responds directly to the client.
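The packet path above can be sketched as a short trace of the addresses carried at each hop. This is only an illustration: the client, virtual server, and pool member addresses are borrowed from the example configuration at the end of this chapter, and `BIGIP_SELF` is a hypothetical placeholder for the local address of the tunnel on the BIG-IP system, which the chapter does not specify.

```shell
#!/bin/sh
# Trace the addresses a packet carries at each hop of a Layer 3 nPath path.
# CLIENT, VIP, and SERVER come from the example later in this chapter.
# BIGIP_SELF is a hypothetical placeholder (not from the source).
CLIENT=10.102.45.10
VIP=10.102.3.202        # virtual server address, also the server loopback
SERVER=10.102.4.10      # pool member, the tunnel endpoint on the server
BIGIP_SELF=192.0.2.1    # hypothetical tunnel source address

trace_path() {
    # Address translation is disabled, so the inner destination stays the VIP.
    echo "1 client to BIG-IP  inner: $CLIENT -> $VIP"
    echo "2 BIG-IP to server  outer: $BIGIP_SELF -> $SERVER | inner: $CLIENT -> $VIP"
    echo "3 server decap      inner: $CLIENT -> $VIP"
    # The reply is sourced from the loopback (VIP) and bypasses the BIG-IP.
    echo "4 server to client  reply: $VIP -> $CLIENT"
}

trace_path
```

Because the inner packet is never rewritten, the server can answer from the virtual server address, which is why each server needs that address on a loopback interface.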

Configuring Layer 3 nPath routing using tmsh

Before performing this procedure, determine the IP address of the loopback interface for each server in the server pool.

Use Layer 3 nPath routing to provide direct server return for traffic in a routed topology in your data center.

- On the BIG-IP system, start a console session.

- Create a server pool with an encapsulation profile.

      tmsh create ltm pool npath_ipip_pool profiles add { ipip } members add { 10.7.1.7:any 10.7.1.8:any 10.7.1.9:any }

  This command creates the pool npath_ipip_pool, which has three members that specify all services: 10.7.1.7:any, 10.7.1.8:any, and 10.7.1.9:any, and applies IPIP encapsulation to outbound traffic.

- Create a profile that disables hardware acceleration.

      tmsh create ltm profile fastl4 fastl4_npath pva-acceleration none

  This command disables the Packet Velocity ASIC acceleration mode in the new Fast L4 profile named fastl4_npath.

- Create a virtual server that has address translation disabled, and includes the pool with the encapsulation profile.

      tmsh create ltm virtual npath_udp destination 176.16.1.1:any pool npath_ipip_pool profiles add { fastl4_npath } translate-address disabled ip-protocol udp

  This command creates a virtual server named npath_udp that intercepts all UDP traffic, does not use address translation, and does not use hardware acceleration. The destination address 176.16.1.1 matches the IP address of the loopback interface on each server.

These implementation steps configure only the BIG-IP device in a deployment example. To configure other devices in your network for L3 nPath routing, consult the device manufacturer's documentation for setting up direct server return (DSR) for each device.
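The tmsh steps in this procedure can be collected into a small dry-run script, shown here as a sketch. It only prints the commands so they can be reviewed before being pasted into a console session; nothing is run against a BIG-IP system. The variable and function names are illustrative, and the two closing `tmsh list` lines are read-only checks added for convenience rather than part of the procedure.

```shell
#!/bin/sh
# Dry-run sketch: print the tmsh commands for Layer 3 nPath routing.
# Names and addresses match the procedure; the shell variables and the
# npath_commands function are illustrative, not part of the product.
POOL=npath_ipip_pool
PROFILE=fastl4_npath
VIRTUAL=npath_udp
VIP=176.16.1.1                      # loopback address on each server
MEMBERS="10.7.1.7:any 10.7.1.8:any 10.7.1.9:any"

npath_commands() {
    echo "tmsh create ltm pool $POOL profiles add { ipip } members add { $MEMBERS }"
    echo "tmsh create ltm profile fastl4 $PROFILE pva-acceleration none"
    echo "tmsh create ltm virtual $VIRTUAL destination ${VIP}:any pool $POOL profiles add { $PROFILE } translate-address disabled ip-protocol udp"
    # Read-only checks to review the resulting objects afterwards.
    echo "tmsh list ltm pool $POOL"
    echo "tmsh list ltm virtual $VIRTUAL"
}

npath_commands
```

Keeping the pool, profile, and virtual server names in variables makes it harder for the three commands to drift apart when the example values are replaced with site-specific ones.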

Configuring a Layer 3 nPath monitor using tmsh

Before you begin this task, configure a server pool with an encapsulation profile, such as npath_ipip_pool.

You can create a custom monitor to provide server health checks of encapsulated tunnel traffic. Setting a variable in the db component causes the monitor traffic to be encapsulated.

- Start at the Traffic Management Shell (tmsh).

- Create a transparent health monitor with the destination IP address of the virtual server that includes the pool with the encapsulation profile.

      tmsh create ltm monitor udp npath_udp_monitor transparent enabled destination 176.16.1.1:*

  This command creates a transparent monitor for UDP traffic with the destination IP address 176.16.1.1, and the port supplied by the pool member.

- Associate the health monitor with the pool that has the encapsulation profile.

      tmsh modify ltm pool npath_ipip_pool monitor npath_udp_monitor

  This command specifies that the BIG-IP system monitors UDP traffic to the pool npath_ipip_pool.

- Enable the variable in the db component that causes the monitor traffic to be encapsulated.

      tmsh modify sys db tm.monitorencap value enable

  This command specifies that the monitor traffic is encapsulated.
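As a sketch, the monitor steps can be printed the same way for review before they are entered in a console session. The pool command is written with the `ltm` module prefix, consistent with the `create ltm` commands in this chapter. The function and variable names are illustrative, and the final `tmsh list sys db` line is a read-only check that is not part of the procedure.

```shell
#!/bin/sh
# Dry-run sketch: print the tmsh commands for the encapsulated monitor.
# Values match the procedure; the shell names are illustrative only.
POOL=npath_ipip_pool
MONITOR=npath_udp_monitor
VIP=176.16.1.1                      # virtual server / server loopback address

monitor_commands() {
    # ":*" lets the pool member supply the port.
    echo "tmsh create ltm monitor udp $MONITOR transparent enabled destination ${VIP}:*"
    echo "tmsh modify ltm pool $POOL monitor $MONITOR"
    echo "tmsh modify sys db tm.monitorencap value enable"
    # Read-only check of the db variable afterwards.
    echo "tmsh list sys db tm.monitorencap"
}

monitor_commands
```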

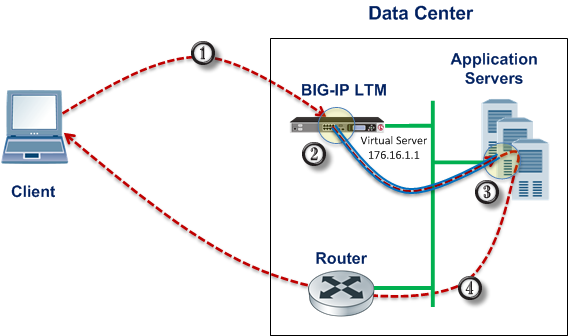

Layer 3 nPath routing example

The following illustration shows one example of an L3 nPath routing configuration in a network.

Example of a Layer 3 routing configuration

The following examples show the configuration code that supports the illustration.

Client configuration:

    # ifconfig eth0 inet 10.102.45.10 netmask 255.255.255.0 up
    # route add -net 10.0.0.0 netmask 255.0.0.0 gw 10.102.45.1

BIG-IP device configuration:

    # - create node pointing to server's ethernet address
    # ltm node 10.102.4.10 {
    #     address 10.102.4.10
    # }
    # - create transparent monitor
    # ltm monitor tcp t.ipip {
    #     defaults-from tcp
    #     destination 10.102.3.202:http
    #     interval 5
    #     time-until-up 0
    #     timeout 16
    #     transparent enabled
    # }
    # - create pool with ipip profile
    # ltm pool ipip.pool {
    #     members {
    #         10.102.4.10:any {            - real server's ip address
    #             address 10.102.4.10
    #         }
    #     }
    #     monitor t.ipip                   - transparent monitor
    #     profiles {
    #         ipip
    #     }
    # }
    # - create FastL4 profile with PVA disabled
    # ltm profile fastl4 fastL4.ipip {
    #     app-service none
    #     pva-acceleration none
    # }
    # - create FastL4 virtual with custom FastL4 profile from previous step
    # ltm virtual test_virtual {
    #     destination 10.102.3.202:any     - server's loopback address
    #     ip-protocol tcp
    #     mask 255.255.255.255
    #     pool ipip.pool                   - pool with ipip profile
    #     profiles {
    #         fastL4.ipip { }              - custom fastL4 profile
    #     }
    #     translate-address disabled       - translate address disabled
    #     translate-port disabled
    #     vlans-disabled
    # }

Linux DSR server configuration:

    # modprobe ipip
    # ifconfig tunl0 10.102.4.10 netmask 255.255.255.0 up
    # ifconfig lo:0 10.102.3.202 netmask 255.255.255.255 -arp up
    # echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
    # echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
    # echo 0 > /proc/sys/net/ipv4/conf/tunl0/rp_filter
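On Linux distributions that no longer ship `ifconfig`, roughly the same server setup can be expressed with `ip` and `sysctl`. The following is a sketch under that assumption, not the documented procedure. The `run` helper and `DRY_RUN` switch are illustrative additions that make the script print its commands by default, and the `-arp` flag from the `ifconfig` example has no counterpart here, so ARP behavior is left to the `arp_ignore` and `arp_announce` settings.

```shell
#!/bin/sh
# Sketch: Linux DSR server setup using iproute2 and sysctl.
# DRY_RUN=1 (the default) prints each command instead of running it.
# The run helper and DRY_RUN variable are illustrative, not from the source.
DRY_RUN=${DRY_RUN:-1}
TUNNEL_ADDR=10.102.4.10/24     # tunl0 address, the pool member
VIP_ADDR=10.102.3.202/32       # virtual server address on the loopback

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

dsr_server_setup() {
    run modprobe ipip
    run ip addr add "$TUNNEL_ADDR" dev tunl0
    run ip link set tunl0 up
    run ip addr add "$VIP_ADDR" dev lo label lo:0
    run sysctl -w net.ipv4.conf.all.arp_ignore=1
    run sysctl -w net.ipv4.conf.all.arp_announce=2
    run sysctl -w net.ipv4.conf.tunl0.rp_filter=0
}

dsr_server_setup
```

Setting `DRY_RUN=0` and running as root would apply the changes; they do not persist across reboots unless they are also added to the distribution's network and sysctl configuration.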