Applies To:

BIG-IP AAM

- 14.0.1, 14.0.0

BIG-IP APM

- 14.0.1, 14.0.0

BIG-IP Analytics

- 14.0.1, 14.0.0

BIG-IP Link Controller

- 14.0.1, 14.0.0

BIG-IP LTM

- 14.0.1, 14.0.0

BIG-IP PEM

- 14.0.1, 14.0.0

BIG-IP AFM

- 14.0.1, 14.0.0

BIG-IP DNS

- 14.0.1, 14.0.0

BIG-IP ASM

- 14.0.1, 14.0.0

Collecting and Viewing TCP Statistics

Overview: Viewing TCP statistics

You can set up the BIG-IP® system to gather information about TCP flows to better understand what is happening on your networks. The system can collect TCP statistics locally, remotely, or both. You can view these statistics in graphical charts, and use the information for troubleshooting and improving network performance.

The statistics reports for both TCP and FastL4 show details about RTT (round trip time), goodput, connections, and packets. For TCP, you can also view statistics for delay analysis. You can save the reports or email them to others.

Task Summary

Creating a TCP Analytics profile

Viewing TCP statistics

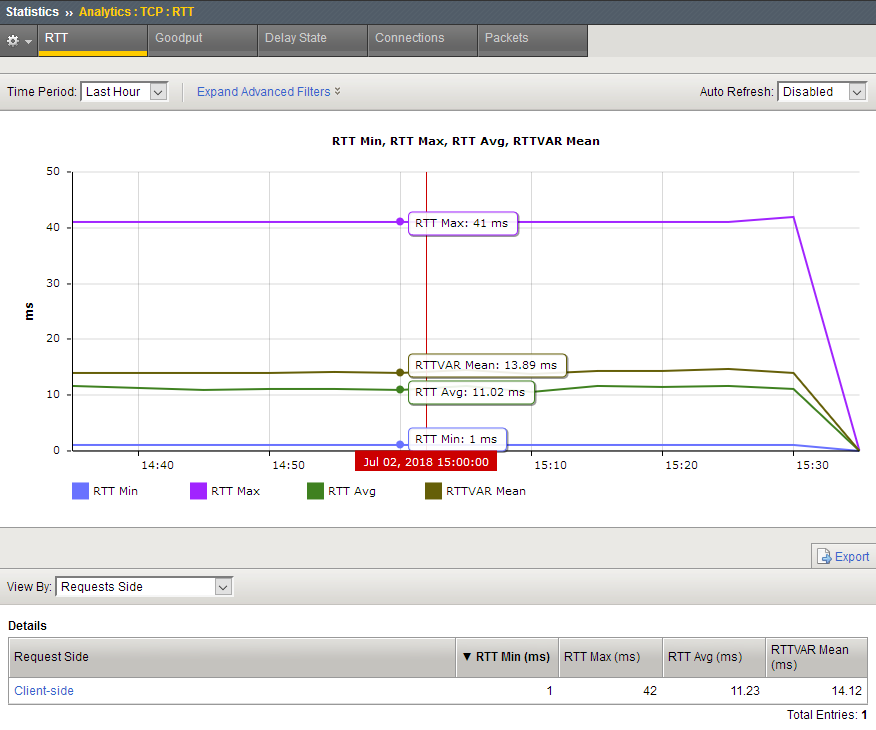

Sample TCP RTT statistics

This figure is a sample TCP statistics chart showing round trip times (RTT), or how long it takes for outgoing TCP packets on the client side to be answered by the server. When you hover over the chart, it shows the RTT minimum, RTT maximum, RTT average (mean), and the RTTVAR mean values. You can use these statistics to help gauge application performance.

Sample TCP RTT statistics chart
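
To make the four hover values concrete, the following minimal sketch computes them from a list of RTT samples. It assumes RTTVAR is maintained with the standard RFC 6298 estimator (alpha = 1/8, beta = 1/4); whether BIG-IP Analytics uses exactly this estimator is an assumption, and the function and field names are hypothetical.

```python
from statistics import mean


def summarize_rtt(samples_ms):
    """Return min, max, and mean RTT plus the mean RTTVAR for RTT samples.

    RTTVAR follows RFC 6298. Treating this as the way the chart's
    RTTVAR value is derived is an assumption of this sketch.
    """
    if not samples_ms:
        raise ValueError("at least one RTT sample is required")

    alpha, beta = 1 / 8, 1 / 4
    srtt = samples_ms[0]        # first measurement: SRTT = R
    rttvar = samples_ms[0] / 2  # first measurement: RTTVAR = R / 2
    rttvar_history = [rttvar]

    for rtt in samples_ms[1:]:
        # RFC 6298 updates RTTVAR first, using the previous SRTT.
        rttvar = (1 - beta) * rttvar + beta * abs(srtt - rtt)
        srtt = (1 - alpha) * srtt + alpha * rtt
        rttvar_history.append(rttvar)

    return {
        "rtt_min": min(samples_ms),
        "rtt_max": max(samples_ms),
        "rtt_avg": mean(samples_ms),
        "rttvar_avg": mean(rttvar_history),
    }
```

A steady RTT drives RTTVAR toward zero, while jittery samples keep it high, which is why the RTTVAR mean is a useful companion to the RTT average when gauging application performance.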

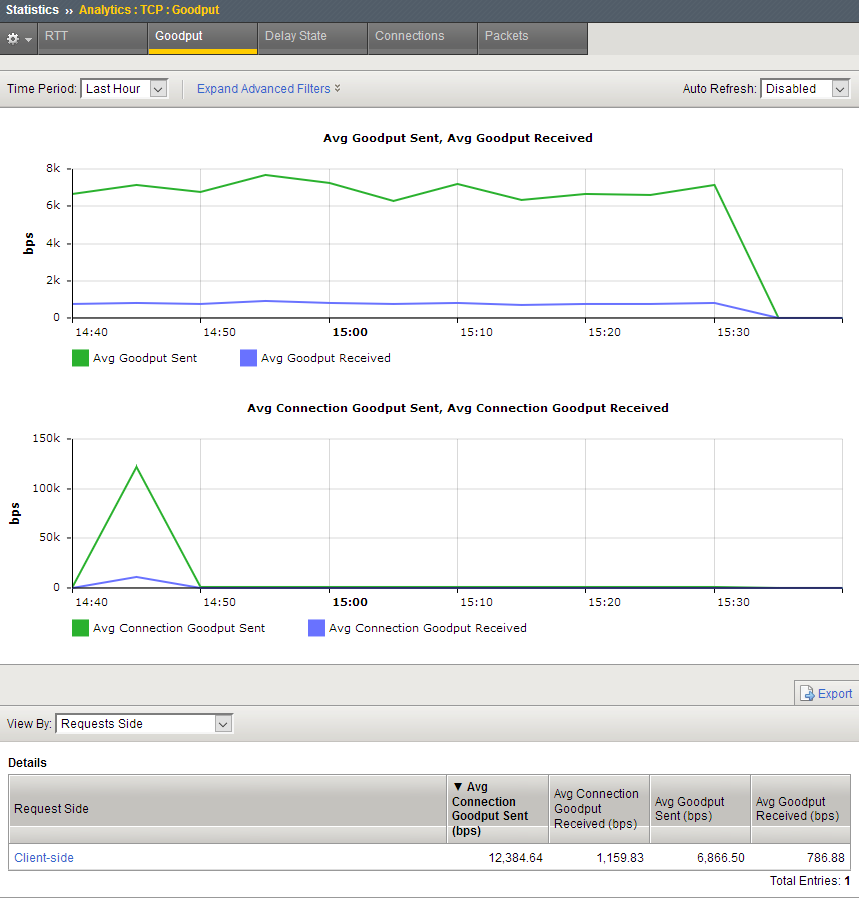

Sample TCP goodput statistics

This figure is a sample TCP statistics report showing goodput sent and received values from the client side. Goodput shows throughput at the application level over a period of time. You can use these statistics to understand network performance.

Sample TCP Goodput statistics chart
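
As a rough illustration of the definition above, goodput can be thought of as application payload delivered divided by elapsed time. The sketch below is not how the BIG-IP system computes the chart values; the function name and the choice of bits per second are assumptions made for the example.

```python
def goodput_bps(payload_bytes, interval_seconds):
    """Goodput in bits per second: application payload per unit of time.

    payload_bytes should count only bytes delivered to the application,
    so the caller excludes TCP/IP headers and retransmitted segments.
    """
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    return payload_bytes * 8 / interval_seconds


# 1.5 MB of payload delivered over one minute.
print(goodput_bps(1_500_000, 60))  # 200000.0
```

Because headers and retransmissions are left out, goodput is never higher than raw throughput on the wire, and a widening gap between the two usually points to loss or protocol overhead.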

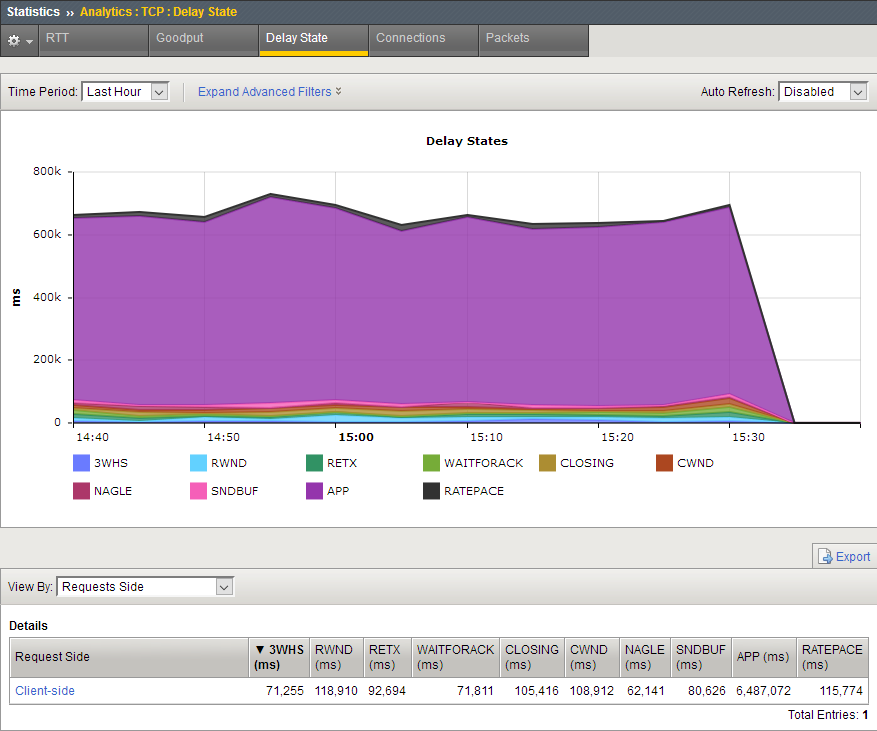

Sample TCP delay state statistics

This sample TCP statistics report shows the causes of delay states. Here, the primary cause of delay is application latency on either the client side or the server side.

Sample TCP Delay State statistics chart

The delay states, described in the following table, are color coded in the chart. You can hover over the part of the chart you are interested in to display the delay states and their values. These states apply to outgoing data. Analytics picks the first listed state that matches the current situation.

| State | Description and What to Do |
|---|---|
| 3WHS | 3-way handshake that starts a TCP connection. Analytics will accrue time in this state only if it can estimate the round-trip-time of the SYN or SYN-ACK that it sent. |
| RETX | Retransmission. TCP is resending data and/or waiting for acknowledgment of those retransmissions. This may indicate lossy links in the data path, or overly aggressive congestion control (for example, a profile with Slow Start disabled or improperly set Packet Loss Ignore settings). Activating rate-pace in the TCP profile may also help. |
| CLOSING | The BIG-IP® system has received acknowledgment of all data, sent the FIN, and is awaiting acknowledgement of the FIN. If the FIN goes out with the last chunk of data, you might not see this state at all. If there is a major issue on the client side, the issue may be that the servers are configured for keepalive (to not send FIN with their last data). |
| WAITFORACK | The BIG-IP system has sent all available data and is awaiting an ACK. If this state is prevalent, it could be a short connection, or possibly either the upper layers or the server are forcing TCP to frequently pause to accept new data. |
| APP | The BIG-IP system has successfully delivered all available data. There is a delay either at the client, the server, or in the layers above TCP on the BIG-IP system. |
| RWND | Receive-window limited. The remote host’s flow-control is forcing the BIG-IP system to idle. |
| SNDBUF | The local send buffer settings limit the data in flight below the observed bandwidth/delay product. Correctable by increasing the Send Buffer size in the TCP profile. |
| CWND | Congestion-window limited. The TCP congestion window is holding available data. This is usually a legitimate response to the bandwidth-delay product and congestion on the packet path. In some cases, it might be a poor response to non-congestion packet loss (fixable using the Packet Loss Ignore profile options) or inaccurate data in the congestion metrics cache (addressable by disabling Congestion Metrics Cache, the ROUTE::clear iRule, or the tmsh command delete net cmetrics dest-addr <addr>). |
| NAGLE | TCP is holding sub-MSS size packets due to Nagle’s algorithm. If the NAGLE state shows up frequently, disable Nagle's algorithm in the TCP profile. |
| RATEPACE | TCP is delaying transmission of packets due to rate pacing. This has no impact on achievable throughput, and no action is required. |
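
The rule that Analytics picks the first listed state that matches can be sketched as a priority lookup. The example below is illustrative only: it assumes that something else has already decided which states currently apply to a connection, and the function names and the `(duration, matching states)` input shape are hypothetical.

```python
from collections import Counter

# Delay states in the order the table lists them (highest priority first).
DELAY_STATE_ORDER = [
    "3WHS", "RETX", "CLOSING", "WAITFORACK", "APP",
    "RWND", "SNDBUF", "CWND", "NAGLE", "RATEPACE",
]


def pick_delay_state(matching_states):
    """Return the first state in table order found in matching_states."""
    for state in DELAY_STATE_ORDER:
        if state in matching_states:
            return state
    return None


def accrue_delay(intervals):
    """Sum time per chosen state.

    intervals is an iterable of (duration_ms, matching_states) pairs;
    this input format is an assumption made for the sketch.
    """
    totals = Counter()
    for duration_ms, matching_states in intervals:
        state = pick_delay_state(matching_states)
        if state is not None:
            totals[state] += duration_ms
    return dict(totals)
```

With this ordering, an interval in which both RWND and CWND apply is charged entirely to RWND, so a small CWND share in the chart does not by itself show that the congestion window was never a limit.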

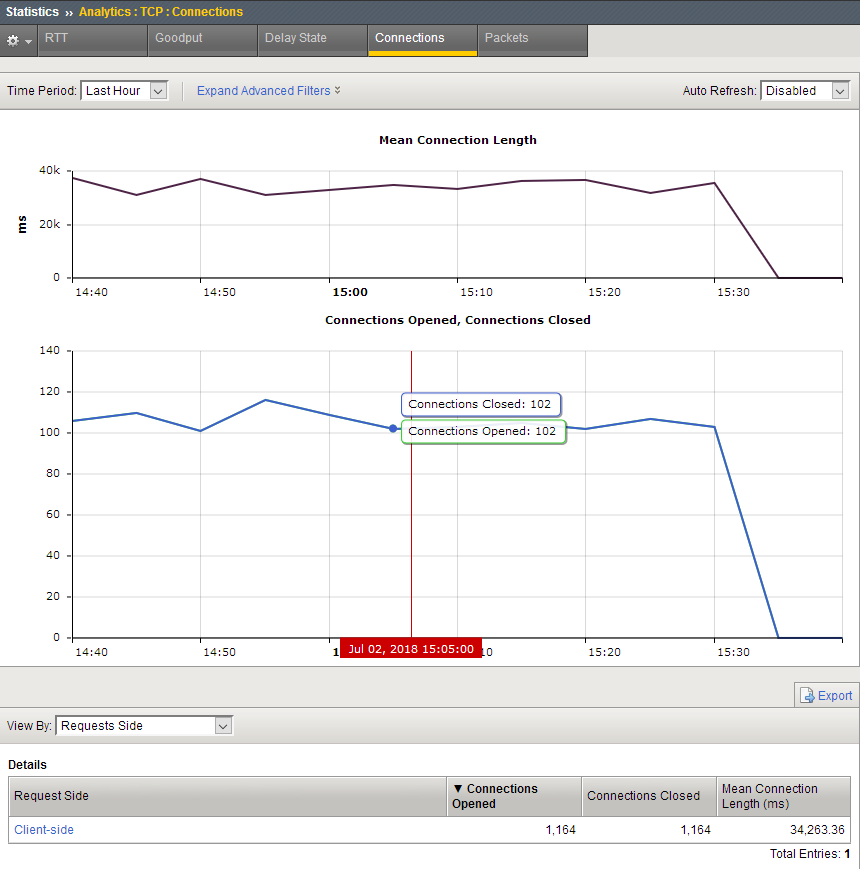

Sample TCP connection statistics

This sample TCP connection report shows the average connection length in milliseconds, and the number of connections opened and closed during the last hour. If new connections consistently outpace closed ones, the system may be carrying a load that it cannot sustain.

Sample TCP Connections statistics chart

You can change the information that is displayed in the chart and the Details table by changing the View By setting. For example, you can view by Countries + Regions to see where the connections are originating.
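
One way to reason about opened versus closed connections is to track the running difference between the two counts. This is a minimal sketch over hypothetical per-interval counts read from the report, not a BIG-IP feature, and the "steadily growing" test is an assumed heuristic rather than a documented threshold.

```python
def net_open_connections(opened, closed):
    """Return the running count of net open connections per interval.

    opened and closed are equal-length lists of per-interval counts.
    """
    if len(opened) != len(closed):
        raise ValueError("opened and closed must have the same length")
    running, backlog = [], 0
    for opened_count, closed_count in zip(opened, closed):
        backlog += opened_count - closed_count
        running.append(backlog)
    return running


def is_steadily_growing(running):
    """True when the net count rises in every interval and ends above zero."""
    return (
        bool(running)
        and running[-1] > 0
        and all(later > earlier for earlier, later in zip(running, running[1:]))
    )
```

For example, opened counts of 120, 130, and 150 against 100 closed per interval give a running total of 20, 50, and 100, which is the pattern worth investigating.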

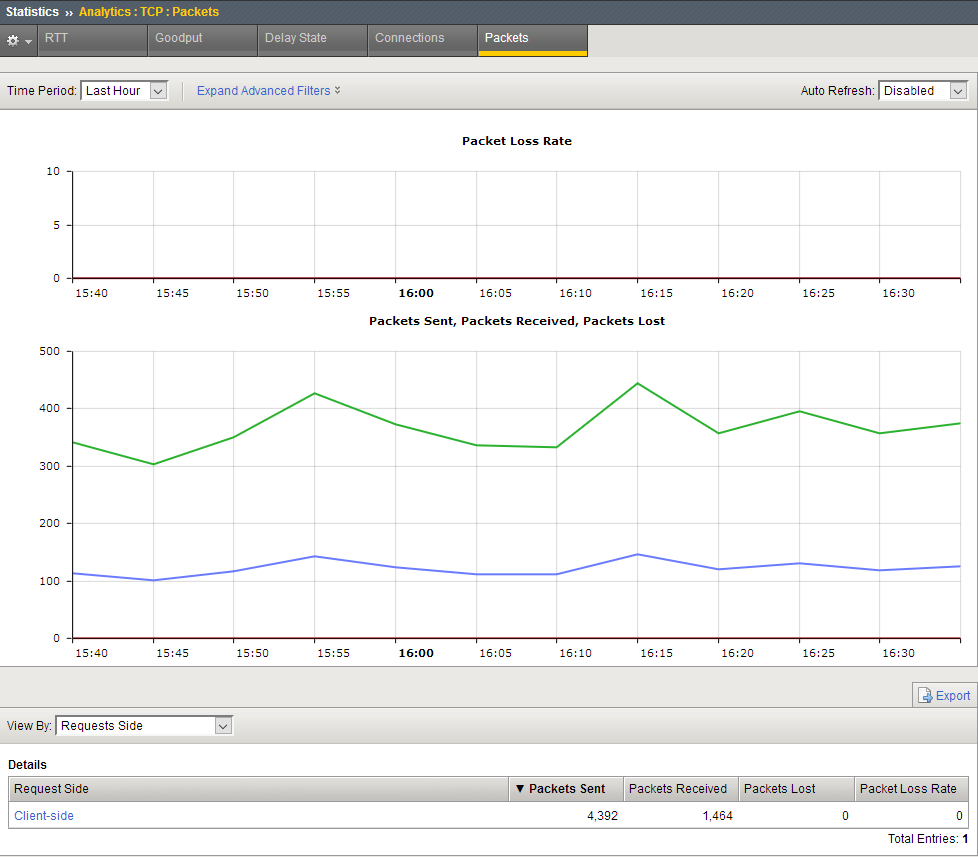

Sample TCP packets statistics

This sample TCP packets report shows the number of packets lost, sent, and received during the last hour. Packet loss is typically caused by network congestion, and can impact application performance. In this example, there is no packet loss.

Sample TCP Packets statistics chart

You can drill down into the statistics. For example, on systems with multiple virtual servers, applications, or subnet addresses, you can investigate specific entities that might be having trouble. If users are having difficulties with an application, from the View By list, select Applications. In the Detail list, click the application to zoom in on the statistics for that application only.
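
When comparing entities during a drill-down, it can help to turn the packet counts into a loss rate and rank by it. The sketch below assumes loss rate is packets lost divided by packets sent, and that the counts were copied from the report by hand or from an export; the entity names and the input mapping are hypothetical.

```python
def packet_loss_rate(packets_sent, packets_lost):
    """Fraction of sent packets that were lost (0.0 when nothing was sent)."""
    if packets_sent < 0 or packets_lost < 0:
        raise ValueError("packet counts must not be negative")
    if packets_sent == 0:
        return 0.0
    return packets_lost / packets_sent


def rank_by_loss(per_entity):
    """Return entity names sorted by loss rate, worst first.

    per_entity maps a name to a (packets_sent, packets_lost) tuple.
    """
    return sorted(
        per_entity,
        key=lambda name: packet_loss_rate(*per_entity[name]),
        reverse=True,
    )


counts = {"app_a": (1000, 5), "app_b": (2000, 40), "app_c": (500, 0)}
print(rank_by_loss(counts))  # ['app_b', 'app_a', 'app_c']
```

Ranking by rate rather than by raw lost-packet count keeps a busy but healthy application from looking worse than a quiet one with a real problem.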

Sample iRule for TCP Analytics

You can create a TCP Analytics profile that uses an iRule to collect the statistics. In the profile, for Statistics Collection, leave both Client Side and Server Side cleared so that the iRule decides when collection starts.

For example:

    # Start collection for one subnet only.
    when CLIENT_ACCEPTED {
        if { [IP::addr [IP::client_addr]/8 equals 10.0.0.0] } {
            TCP::analytics enable
        }
    }

    when HTTP_REQUEST {
        # Must check the subnet again to avoid starting collection
        # for all connections.
        if { [IP::addr [IP::client_addr]/8 equals 10.0.0.0] } {
            # Make stats queryable by URI.
            TCP::analytics key "[HTTP::uri]"
        }
    }

For more information about iRules®, refer to devcentral.f5.com.