Applies To:

BIG-IP AAM

- 12.1.0

BIG-IP APM

- 12.1.0

BIG-IP LTM

- 12.1.0

BIG-IP DNS

- 12.1.0

BIG-IP ASM

- 12.1.0

Additional Network Considerations

Network separation of Layer 2 and Layer 3 objects

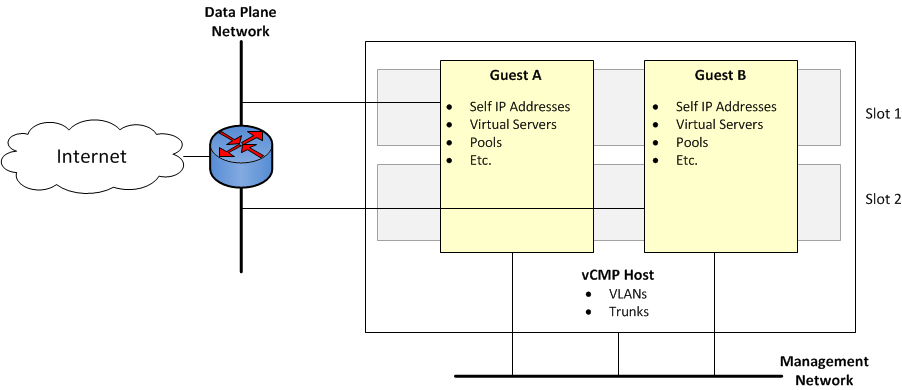

On a vCMP system, you must configure BIG-IP® Layer 2 objects, such as trunks and VLANs, on the vCMP host and then selectively decide which of these objects you want each guest to inherit. Typically, to ensure that each guest's data plane traffic is securely isolated from other guests, the host administrator creates a separate VLAN for each guest to use. Other objects such as self IP addresses, virtual servers, pools, and profiles are configured on the guest by each guest administrator. With this separation of Layer 2 from Layer 3 objects, application traffic is targeted directly to the relevant guest, further allowing each guest to function as a fully-independent BIG-IP® device.

The following illustration shows the separation of Layer 2 objects from higher-layer objects on the vCMP system:

Isolation of network objects on the vCMP system

About the VLAN publishing strategy

For both host and guest administrators, it is important to understand certain concepts about VLAN configuration on a vCMP system:

- VLAN subscription from host to guest

- System behavior when a host and a guest VLAN have duplicate names or tags

Overview of VLAN subscription

As a vCMP® host administrator, when you create or modify a guest, you typically publish one or more host-based VLANs to the guest. Publishing a host-based VLAN to a guest grants the guest a subscription to that VLAN configuration and to the VLAN's underlying Layer 2 resources.

When you publish a VLAN to a guest and the guest does not already contain a VLAN with the same name or tag as the host-based VLAN, the vCMP system automatically creates a configuration for the published VLAN on the guest.

If you modify a guest's properties to remove a VLAN publication, you remove the guest's subscription to that host-based VLAN. However, the VLAN configuration that the host created within the guest during the initial publication remains in the guest for the guest to use. In this case, any changes that a host administrator later makes to that VLAN are not propagated to the guest.

In general, a VLAN that appears within a guest is one of the following: a host-based VLAN currently published to the guest, a host-based VLAN that was once but is no longer published to the guest, or a VLAN that the guest administrator manually created within the guest.

This example shows the effect of publishing a host-based VLAN to a guest that initially had no VLANs, and then removing that publication from the guest.

# Within guest G1, show that the guest has no VLANs configured:

[root@G1:/S1-green-P:Active:Standalone] config # tmsh list net vlan

# From the host, publish VLAN v1024 to guest G1:

[root@host_210:/S1-green-P:Active:Standalone] config # tmsh modify vcmp guest G1 vlans add { v1024 }

# Within guest G1, list all VLANs:

[root@G1:/S1-green-P:Active:Standalone] config # tmsh list net vlan

net vlan v1024 {

if-index 96

tag 1024

}

# On the host, remove the VLAN publication from guest G1:

[root@host_210:/S1-green-P:Active:Standalone] config # tmsh modify vcmp guest G1 vlans del { v1024 }

# Within guest G1, notice that the VLAN configuration created by the publication still exists:

[root@G1:/S1-green-P:Active:Standalone] config # tmsh list net vlan

net vlan v1024 {

if-index 96

tag 1024

}
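To make the subscription rules concrete, here is a minimal Python sketch that replays the example above. It is a hypothetical model, not an F5 interface: the `GuestModel` class and its method names are illustrative. The assumption that host-side edits reach the guest only while the guest is still subscribed is inferred from the description of unpublished VLANs rather than stated outright.

```python
from dataclasses import dataclass, field


@dataclass
class GuestModel:
    """Hypothetical, simplified model of one vCMP guest's VLAN table (not an F5 API)."""
    vlans: dict[str, int] = field(default_factory=dict)      # VLAN name -> tag, as seen in the guest
    created_by_host: set[str] = field(default_factory=set)   # configs that a publication created
    subscribed: set[str] = field(default_factory=set)        # host VLANs currently published here

    def publish(self, name: str, tag: int) -> None:
        # The guest becomes subscribed, but a config is created only when no
        # existing guest VLAN shares the published VLAN's name or tag.
        self.subscribed.add(name)
        if name not in self.vlans and tag not in self.vlans.values():
            self.vlans[name] = tag
            self.created_by_host.add(name)

    def unpublish(self, name: str) -> None:
        # Removing the publication ends the subscription only; the config stays.
        self.subscribed.discard(name)

    def host_modifies(self, name: str, new_tag: int) -> None:
        # Assumption: host-side edits reach only host-created configs that are
        # still subscribed; unpublished or guest-created VLANs are untouched.
        if name in self.subscribed and name in self.created_by_host:
            self.vlans[name] = new_tag


# Replaying the tmsh example: publish v1024 to G1, then remove the publication.
g1 = GuestModel()
g1.publish("v1024", 1024)
g1.unpublish("v1024")
g1.host_modifies("v1024", 2048)   # not propagated: G1 is no longer subscribed
print(g1.vlans)                    # {'v1024': 1024}
```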

About VLANs with identical tags and different names

Sometimes a host administrator might publish a VLAN to a guest, but the guest administrator has already created, or later creates, a VLAN with a different name but the same VLAN tag. In this case, the guest VLAN always overrides the host VLAN. The host VLAN can still exist on the host (for other guests to subscribe to), but the guest uses its own VLAN.

Whenever host and guest VLANs have different names but the same tag, traffic can flow between the host and the guest because the VLAN tags align. That is, when the tags match, the VLANs share the same underlying Layer 2 infrastructure, which enables the host to reach the guest.
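The override and reachability rules for conflicting VLANs fit in a few lines. The following Python sketch is illustrative only: `resolve_conflict` and `Outcome` are hypothetical names, and the function simply encodes the two rules this document states, namely that the guest VLAN wins whenever names or tags collide, and that host-to-guest Layer 2 traffic depends only on whether the tags match. It covers both the identical-tag case and the identical-name case.

```python
from typing import NamedTuple


class Outcome(NamedTuple):
    guest_uses: str        # name of the VLAN configuration the guest actually uses
    layer2_aligned: bool   # True if host and guest can reach each other at Layer 2


def resolve_conflict(host_name: str, host_tag: int,
                     guest_name: str, guest_tag: int) -> Outcome:
    """Hypothetical summary of the override rules for a published host VLAN
    that collides with an existing guest VLAN."""
    if host_name != guest_name and host_tag != guest_tag:
        raise ValueError("no conflict: the published VLAN is created in the guest")
    # The guest VLAN always overrides the host VLAN; reachability depends on tags only.
    return Outcome(guest_uses=guest_name, layer2_aligned=(host_tag == guest_tag))


# Same tag, different names: traffic flows.
print(resolve_conflict("VLAN_B", 1000, "VLAN_A", 1000))
# Outcome(guest_uses='VLAN_A', layer2_aligned=True)

# Same name, different tags: the guest still uses its own VLAN, but traffic fails.
print(resolve_conflict("VLAN_A", 1001, "VLAN_A", 1000))
# Outcome(guest_uses='VLAN_A', layer2_aligned=False)
```

In this model, VLAN names never affect reachability; only the tag comparison does.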

The example here shows the tmsh command sequence for creating two separate VLANs with different names and the same tag, and the resulting successful traffic flow.

# On the host, create a VLAN with a unique name but with the same tag (1000) as the existing guest VLAN VLAN_A:

[root@host_210:/S1-green-P:Active:Standalone] config # tmsh create net vlan VLAN_B tag 1000

# On the host, publish the host VLAN to the guest:

[root@host_210:/S1-green-P:Active:Standalone] config # tmsh modify vcmp guest guest1 vlans add { VLAN_B }

# Within the guest, show that the guest still has its own VLAN only, and not the VLAN published from the host:

[root@G1:/S1-green-P:Active:Standalone] config # tmsh list net vlan all

net vlan VLAN_A {

if-index 192

tag 1000

}

# On the guest, create a self IP address for VLAN_A:

[root@G1:/S1-green-P:Active:Standalone] config # tmsh create net self 10.1.1.1/24 vlan VLAN_A

# On the host, delete the self IP address on VLAN_A (this VLAN also exists on the guest) and re-create the self IP address on VLAN_B (this VLAN has the same tag as VLAN_A):

[root@host_210:/S1-green-P:Active:Standalone] config # tmsh delete net self 10.1.1.2/24

[root@host_210:/S1-green-P:Active:Standalone] config # tmsh create net self 10.1.1.2/24 vlan VLAN_B

# From the host, open a connection to the guest, and notice that because the two VLANs have the same tag, the connection succeeds:

[root@host_210:/S1-green-P:Active:Standalone] config # ping -c2 10.1.1.1

PING 10.1.1.1 (10.1.1.1) 56(84) bytes of data.

64 bytes from 10.1.1.1: icmp_seq=1 ttl=255 time=3.35 ms

64 bytes from 10.1.1.1: icmp_seq=2 ttl=255 time=0.989 ms

--- 10.1.1.1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.989/2.170/3.352/1.182 ms

About VLANs with identical names and different tags

Sometimes a host administrator might publish a VLAN to a guest, but the guest administrator has already created, or later creates, a VLAN with the same name but a different VLAN tag. In this case, the guest VLAN always overrides the host VLAN. The host VLAN can still exist on the host (for other guests to subscribe to), but the guest uses its own VLAN.

Whenever host and guest VLANs have the same name but different tags, traffic cannot flow between the identically named VLANs at Layer 2. That is, when the tags do not match, the VLANs do not share the same underlying Layer 2 infrastructure, which prevents the host from reaching the guest.

The example here shows the tmsh command sequence for creating two separate VLANs with the same names and different tags, and the resulting traffic flow issue.

# Within the guest, create a VLAN:

[root@G1:/S1-green-P:Active:Standalone] config # tmsh create net vlan VLAN_A tag 1000

# Show that no VLANs exist on the host:

[root@host_210:/S1-green-P:Active:Standalone] config # tmsh list net vlan all

[root@host_210:/S1-green-P:Active:Standalone] config #

# On the host, create a VLAN with the same name as the guest VLAN but with a different tag:

[root@host_210:/S1-green-P:Active:Standalone] config # tmsh create net vlan VLAN_A tag 1001

# Publish the host VLAN to the guest:

[root@host_210:/S1-green-P:Active:Standalone] config # tmsh modify vcmp guest guest1 vlans add { VLAN_A }

# Within the guest, show that the guest still has its own VLAN only, and not the VLAN published from the host:

[root@G1:/S1-green-P:Active:Standalone] config # tmsh list net vlan all

net vlan VLAN_A {

if-index 192

tag 1000

}

# Within the guest, create a self IP address for the VLAN:

[root@G1:/S1-green-P:Active:Standalone] config # tmsh create net self 10.1.1.1/24 vlan VLAN_A

# On the host, create a self IP address for the identically-named VLAN:

[root@host_210:/S1-green-P:Active:Standalone] config # tmsh create net self 10.1.1.2/24 vlan VLAN_A

# From the host, open a connection to the guest, and notice that because the two VLANs have different tags, the connection fails:

[root@host_210:/S1-green-P:Active:Standalone] config # ping -c2 10.1.1.1

PING 10.1.1.1 (10.1.1.1) 56(84) bytes of data.

From 10.1.1.2 icmp_seq=1 Destination Host Unreachable

From 10.1.1.2 icmp_seq=2 Destination Host Unreachable

--- 10.1.1.1 ping statistics ---

2 packets transmitted, 0 received, +2 errors, 100% packet loss, time 3000ms

pipe 2
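To spot this situation before testing with ping, you could compare the `tmsh list net vlan` output from the host with the output from the guest. The sketch below is a hypothetical Python helper that parses only the simple `net vlan NAME { ... tag N ... }` layout shown in these examples. The guest listing mirrors the output above. The host listing is illustrative, representing what the host would hold after the steps above, and is not captured output.

```python
import re

# Matches top-level "net vlan NAME { ... }" stanzas; the closing brace must start a line.
STANZA = re.compile(r"^net vlan (\S+) \{(.*?)\n\}", re.S | re.M)
# Matches a "tag N" property line inside a stanza (not "tag-mode").
TAG = re.compile(r"^[ \t]*tag (\d+)[ \t]*$", re.M)


def parse_vlans(listing: str) -> dict[str, int]:
    """Return {vlan_name: tag} for each VLAN stanza that has a tag line."""
    vlans = {}
    for name, body in STANZA.findall(listing):
        match = TAG.search(body)
        if match:
            vlans[name] = int(match.group(1))
    return vlans


def tag_mismatches(host_listing: str, guest_listing: str) -> list[tuple[str, int, int]]:
    """Return (name, host_tag, guest_tag) for VLANs that share a name but not a tag."""
    host, guest = parse_vlans(host_listing), parse_vlans(guest_listing)
    return [(name, host[name], guest[name])
            for name in sorted(host.keys() & guest.keys())
            if host[name] != guest[name]]


GUEST = "net vlan VLAN_A {\n    if-index 192\n    tag 1000\n}\n"
HOST = "net vlan VLAN_A {\n    tag 1001\n}\n"   # illustrative host listing
print(tag_mismatches(HOST, GUEST))             # [('VLAN_A', 1001, 1000)]
```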

Solution for tag discrepancy between host and guest VLANs

When a host-based VLAN and a guest-created VLAN have identical names but different VLAN tags, Layer 2 traffic cannot flow between the host and the guest. To resolve this issue, perform these tasks in the order shown:

- Within the guest, delete the relevant VLAN.

- On the host, remove the VLAN publication from the guest.

- On the host, modify the tag of the host-based VLAN.

- On the host, publish the VLAN to the guest.

- Within the guest, view the VLAN to verify its tag.
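The five tasks map onto tmsh commands of the same form used in the examples earlier in this document. The hypothetical Python helper below only builds the command strings, in order, together with where each one runs. It does not execute anything. The guest name `guest1`, the VLAN `VLAN_A`, and the target tag 1000 come from the identical-name example.

```python
def tag_fix_commands(guest: str, vlan: str, tag: int) -> list[tuple[str, str]]:
    """Return (where, tmsh command) pairs for the five tasks, in order.

    Hypothetical helper: `guest` is the vCMP guest name as known to the host,
    `vlan` is the conflicting VLAN name, and `tag` is the tag that the host VLAN
    should end up with (the tag the guest was already using).
    """
    return [
        # Assumption: if a self IP address (such as 10.1.1.1 in the example) still
        # references the VLAN, you may need to delete that self IP first.
        ("guest", f"tmsh delete net vlan {vlan}"),
        ("host", f"tmsh modify vcmp guest {guest} vlans del {{ {vlan} }}"),
        ("host", f"tmsh modify net vlan {vlan} tag {tag}"),
        ("host", f"tmsh modify vcmp guest {guest} vlans add {{ {vlan} }}"),
        ("guest", f"tmsh list net vlan {vlan}"),
    ]


for where, command in tag_fix_commands("guest1", "VLAN_A", 1000):
    print(f"[{where}] {command}")
```

Keeping the order explicit matters because the published VLAN is created in the guest only when no guest VLAN with the same name or tag exists.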

Deleting the VLAN within the guest

Perform this task when you want to delete a VLAN from within a vCMP guest.

Removing the VLAN publication from the guest

Perform this task on the vCMP host to remove the guest's subscription to the host-based VLAN.

Modifying the tag of the host-based VLAN

Perform this task to change a VLAN tag on a vCMP host to ensure that the tag matches that of a VLAN on a guest.

Publishing the VLAN to the guest

Perform this task on the vCMP host to publish the VLAN, now with the corrected tag, to the guest.

Viewing the new VLAN within the guest

Perform this task to verify that the VLAN that the host published to a guest appears on the guest, with the correct tag.

Interface assignment for vCMP guests

The virtualized nature of vCMP® guests abstracts many underlying hardware dependencies, which means that there is no direct relationship between guest interfaces and the physical interfaces assigned to VLANs on the vCMP host.

Rather than configuring any interfaces on a guest, a guest administrator simply creates a self IP address within the guest, specifying one of the VLANs that the host administrator previously configured on the host and assigned to the guest during guest creation.

As host administrator, if you want to limit a guest to specific physical interfaces, change the physical interface assignments on the VLANs that you publish to that guest.
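Because guests never own interfaces directly, the set of physical interfaces that a guest's traffic can use follows from the VLANs published to it. This minimal Python sketch models that derivation. The VLAN names and interface identifiers (`1/1.1` and so on) are hypothetical.

```python
def guest_interfaces(published: set[str], vlan_interfaces: dict[str, set[str]]) -> set[str]:
    """Return the physical interfaces reachable through the VLANs published to a guest.

    The guest itself has no interface configuration; its reach is the union of the
    interfaces that the host assigns to those VLANs.
    """
    reachable: set[str] = set()
    for vlan in published:
        reachable |= vlan_interfaces.get(vlan, set())
    return reachable


# Hypothetical host configuration: VLAN name -> assigned physical interfaces.
host_vlans = {"v1024": {"1/1.1", "2/1.1"}, "v1025": {"1/1.2"}}
print(sorted(guest_interfaces({"v1024"}, host_vlans)))   # ['1/1.1', '2/1.1']

# To restrict the guest, the host administrator changes the VLAN's interfaces.
host_vlans["v1024"] = {"1/1.1"}
print(sorted(guest_interfaces({"v1024"}, host_vlans)))   # ['1/1.1']
```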

Management IP addresses for bridged guests

When a system administrator initially configures the VIPRION system, the administrator specifies a primary cluster management IP address for the system as a whole, as well as a separate management IP address for each slot in the VIPRION cluster. On a vCMP system, because each guest functions like an independent VIPRION cluster, a vCMP host or guest administrator assigns a similar set of IP addresses for each guest:

- A cluster IP address

  This is the unique IP address that a host administrator assigns to a guest during guest creation. The cluster IP address is the management IP address that a guest administrator uses to log in to a guest to provision, configure, and manage BIG-IP® modules. This IP address is required for each guest.

- One or more cluster member IP addresses

  These are unique IP addresses that a guest administrator assigns to the virtual machines (VMs) in the guest's cluster, for high-availability purposes. For example, if a guest on a four-slot system is configured to run on four slots, then the guest administrator must create an IP address for each of those four slots. These addresses are management addresses; although they are optional for a standalone system, they are required for a device service clustering (DSC®) configuration. In this case, a second set of unique cluster member IP addresses must be configured on the peer system. These are the IP addresses that the guest administrator specifies when configuring failover for each guest that is a member of a Sync-Failover device group.

As an example, suppose you have a pair of VIPRION 2400 chassis, each hosting two guests, where each guest on one chassis has a peer guest on the other chassis to form a redundant configuration. In this case, as host administrator, you must assign a total of four cluster IP addresses (one for each of the four guests).

If each guest spans four slots, then the guest administrators must assign four cluster member IP addresses per guest per chassis, for a total of eight per redundant guest pair. The result is a total of 20 unique vCMP-related management IP addresses for the full redundant pair of chassis containing two guests per chassis (four cluster IP addresses and 16 cluster member IP addresses).
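The address arithmetic in this example generalizes to other numbers of chassis, guests, and slots. The following Python sketch assumes, as the example does, that every guest spans the same number of slots and that each guest has exactly one cluster IP address plus one cluster member IP address per slot. The function name is hypothetical.

```python
def vcmp_mgmt_ip_count(chassis: int, guests_per_chassis: int, slots_per_guest: int) -> dict[str, int]:
    """Count vCMP-related management IP addresses under the stated assumptions."""
    guests = chassis * guests_per_chassis
    cluster_ips = guests                    # one cluster IP per guest (assigned by the host admin)
    member_ips = guests * slots_per_guest   # one cluster member IP per slot per guest (guest admin)
    return {"cluster": cluster_ips, "member": member_ips, "total": cluster_ips + member_ips}


# The VIPRION 2400 example: two chassis, two guests per chassis, four slots per guest.
print(vcmp_mgmt_ip_count(chassis=2, guests_per_chassis=2, slots_per_guest=4))
# {'cluster': 4, 'member': 16, 'total': 20}
```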