Applies To:

BIG-IP AAM

- 13.0.1, 13.0.0

BIG-IP APM

- 13.0.1, 13.0.0

BIG-IP LTM

- 13.0.1, 13.0.0

BIG-IP DNS

- 13.0.1, 13.0.0

BIG-IP ASM

- 13.0.1, 13.0.0

What is flexible resource allocation?

Flexible resource allocation is a built-in vCMP® feature that allows vCMP host administrators to optimize the use of available system resources. Flexible resource allocation gives you the ability to configure the vCMP host to allocate a different amount of CPU and memory to each guest through core allocation, based on the needs of the specific BIG-IP® modules provisioned within a guest.

When you create each guest, you specify the number of logical cores that you want the host to allocate to the guest, and you identify the specific slots that you want the host to assign to the guest. Configuring these settings determines the total amount of CPU and memory that the host allocates to the guest. With flexible allocation, you can customize CPU and memory allocation in granular ways that meet the specific resource needs of each individual guest.

Resource allocation planning

When you create a vCMP® guest, you must decide the amount of dedicated resource, in the form of CPU and memory, that you want the vCMP host to allocate to the guest. You can allocate a different amount of resources to each guest on the system.

Prerequisite hardware considerations

Blade models vary in how many cores the blade provides and how much memory is available per core. The maximum number of guests that each blade model supports also varies. For example, a single B2100 blade provides eight cores and approximately 3 gigabytes (GB) of memory per core, and supports a maximum of four guests.

Before you can determine the number of cores to allocate to a guest and the number of slots to assign to a guest, you should understand:

- The total number of cores that the blade model provides

- The amount of memory that each blade model provides

- The maximum number of guests that the blade model supports

By understanding these metrics, you ensure that the total amount of resource you allocate to guests is aligned with the amount of resource that your blade model supports.

For specific information on the resources that each blade model provides, see the vCMP® guest memory/CPU core allocation matrix on the AskF5™ Knowledge Base at http://support.f5.com.
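As a rough illustration of the planning check described above, the following Python sketch compares a planned set of guests against a blade's core count and guest limit. The names `BladeModel` and `plan_fits_blade` are hypothetical and are not part of any F5 tool; the B2100 figures are the ones quoted in this section, and figures for other blade models should come from the allocation matrix.

```python
from dataclasses import dataclass


@dataclass
class BladeModel:
    """Capacity figures for one blade model (hypothetical helper type)."""
    total_cores: int
    max_guests: int


def plan_fits_blade(blade: BladeModel, cores_per_guest: list[int]) -> bool:
    """Return True if the planned guests fit the blade's core and guest limits."""
    within_guest_limit = len(cores_per_guest) <= blade.max_guests
    within_core_limit = sum(cores_per_guest) <= blade.total_cores
    return within_guest_limit and within_core_limit


# B2100 figures quoted in the text: eight cores, at most four guests.
b2100 = BladeModel(total_cores=8, max_guests=4)
print(plan_fits_blade(b2100, [2, 2, 4]))        # three guests using eight cores
print(plan_fits_blade(b2100, [2, 2, 2, 2, 2]))  # five guests is over the guest limit
```

The sketch checks only core and guest counts; memory per guest is covered by the host memory allocation formula.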

Understanding guest resource requirements

Before you create vCMP® guests and allocate system resources to them, you need to determine the specific CPU and memory needs of each guest. You can then decide how many cores to allocate and slots to assign to a guest, factoring in the resource capacity of your blade model.

To determine the CPU and memory resource needs, you must know:

- The number of guests you need to create

- The specific BIG-IP® modules you need to provision within each guest

- The combined memory requirement of all BIG-IP modules within each guest

About core allocation for a guest

When you create a guest on the vCMP® system, you must specify the total number of cores that you want the host to allocate to the guest based on the guest's total resource needs. Each core provides some amount of virtual CPU (vCPU) and a fixed amount of memory.

Memory allocation

You should specify enough cores to satisfy the combined memory requirements of all BIG-IP® modules that you provision within the guest. When you deploy the guest, the host allocates this number of cores to every slot on which the guest runs, regardless of the number of slots you have assigned to the guest.

It is important to understand that the total amount of memory available to a guest is only as much as the host has allocated to each slot. If you instruct the host to allocate a total of two cores per slot for the guest (for example, 6 GB of memory depending on blade model) and you configure the guest to run on four slots, the host does not aggregate the 6 GB of memory on each slot to provide 24 GB of memory for the guest. Instead, the guest still has a total of 6 GB of memory available. This is because blades in a chassis operate as a cluster of independent devices, which ensures that if the number of blades for the guest is reduced for any reason, the remaining blades still have the required memory available to process the guest traffic.

If a blade suddenly becomes unavailable, the total traffic processing resource for guests on that blade is reduced and the host must redistribute the load on that slot to the remaining assigned slots. This increases the number of connections that each remaining blade must process and therefore the amount of memory actually used per blade. The increased percentage of memory used per blade compared to the amount of memory allocated could cause swapping and degraded performance. You can prevent this result by making sure you allocate enough cores to the guest, per slot, when you create the guest.

vCPU allocation

Unlike memory allocation, the total allocation of vCPUs for a guest is the sum total of vCPUs allocated to the guest on all blades in the chassis. This means that if you remove a blade from the chassis, total vCPU allocation for a guest decreases by the number of vCPUs allocated to the guest on that blade.
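The difference between memory and vCPU allocation can be sketched in a few lines of Python. This is only an illustration of the rule described above: the function name `guest_totals` is hypothetical, and the figure of four vCPUs per slot is an assumed value, not one taken from a specific blade model.

```python
def guest_totals(memory_gb_per_slot: float, vcpus_per_slot: int, slots: int) -> tuple[float, int]:
    """Return (memory available to the guest, total vCPUs) for a guest on `slots` blades."""
    # Memory is not aggregated across slots: the guest has only the per-slot amount.
    total_memory_gb = memory_gb_per_slot
    # vCPUs are summed over every blade on which the guest runs.
    total_vcpus = vcpus_per_slot * slots
    return total_memory_gb, total_vcpus


# 6 GB per slot (the two-core example above) and an assumed 4 vCPUs per slot.
print(guest_totals(6, 4, slots=4))  # memory stays at 6 GB; vCPUs add up across slots
print(guest_totals(6, 4, slots=3))  # losing a blade lowers vCPUs but not memory
```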

About core fragmentation when deploying a guest

When you deploy a guest, you might see an error message such as this:

Could not allocate vCMP guest guest-name because slot 1: fragmented resources

This message is caused by core fragmentation. Fragmentation occurs when a single-CPU guest consumes only one of the two hyperthreads that make up a core, and you have deployed more than one single-CPU guest. Each single-CPU guest leaves the remaining hyperthread on its core available to other guests, but not in a contiguous way that other guests can use. Most guests are multi-CPU guests and therefore require that each allocated core be a full core consisting of two hyperthreads. The vCMP system cannot allocate a core to a guest by combining two non-contiguous (that is, fragmented) hyperthreads.

The best way to fix the problem is to set one of the single-CPU guests back to the Configured state and then re-deploy it. In this case, the vCMP system will most likely allocate a hyperthread from a different core to that guest, freeing up a full, two-hyperthread core for the guest that triggered the error message.
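The following Python sketch models core fragmentation in a simplified way. It assumes a hypothetical four-core blade and represents each core by its number of free hyperthreads (0, 1, or 2); the function names are illustrative and do not reflect how the vCMP host tracks cores internally.

```python
def free_full_cores(free_hyperthreads: list[int]) -> int:
    """Count cores whose two hyperthreads are both free."""
    return sum(1 for free in free_hyperthreads if free == 2)


def can_allocate(free_hyperthreads: list[int], cores_needed: int) -> bool:
    """A multi-CPU guest needs whole cores; stray single hyperthreads do not count."""
    return free_full_cores(free_hyperthreads) >= cores_needed


# Cores 0 and 1 are fully used. Two single-CPU guests each hold one
# hyperthread, on cores 2 and 3, so each of those cores has one left.
fragmented = [0, 0, 1, 1]
print(sum(fragmented), can_allocate(fragmented, 1))  # two free hyperthreads, no full core

# After one single-CPU guest is re-deployed onto the other half-used core,
# core 3 is completely free again.
defragmented = [0, 0, 0, 2]
print(sum(defragmented), can_allocate(defragmented, 1))
```

In both cases two hyperthreads are free, but only the second layout leaves a full core for a multi-CPU guest.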

Formula for host memory allocation to a guest

You can use a formula to confirm that the cores you plan to allocate to a specific guest are sufficient, given the guest's total memory requirements:

(total_GB_memory_per_blade - 3 GB) x (cores_per_slot_per_guest / total_cores_per_blade) = amount of guest memory allocation from host

The variables in this formula are defined as follows:

- total_GB_memory_per_blade

- The total amount of memory in gigabytes that your specific blade model provides (for all guests combined).

- cores_per_slot_per_guest

- The estimated number of cores needed to provide the total amount of memory that the guest requires.

- total_cores_per_blade

- The total number of cores that your specific blade model provides (for all guests combined).

For example, if you have a VIPRION® B2150 blade, which provides approximately 32 GB of memory through a maximum of eight cores, and you estimate that the guest will need two cores to satisfy the guest's total memory requirement of 8 GB, the formula looks as follows:

(32 GB - 3 GB) x (2 cores / 8 cores) = 7.25 GB memory that the host will allocate to the guest per slot

In this case, the formula shows that two cores will not provide sufficient memory for the guest. If you specify four cores per slot instead of two, the formula shows that the guest will have sufficient memory:

(32 GB - 3 GB) x (4 cores / 8 cores) = 14.5 GB memory that the host will allocate to the guest per slot

Note that except for single-core guests, the host always allocates cores in increments of two. For example, for B2150 blade models, the host allocates cores in increments of 2, 4, and 8.

Once you use this formula for each of the guests you plan to create on a slot, you can create your guests so that the combined memory allocation for all guests on a slot does not exceed the total amount of memory that the blade model provides.
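The formula above can be expressed as a small Python function, shown here as a minimal sketch. The function names and the `subtracted_gb` parameter name are illustrative; the default core counts (2, 4, and 8) are taken from the B2150 example and may differ on other blade models.

```python
from typing import Optional


def guest_memory_gb(total_gb_memory_per_blade: float,
                    cores_per_slot_per_guest: int,
                    total_cores_per_blade: int,
                    subtracted_gb: float = 3.0) -> float:
    """Memory the host allocates to the guest per slot, per the formula above."""
    # subtracted_gb is the 3 GB that the formula removes from the blade total.
    usable_gb = total_gb_memory_per_blade - subtracted_gb
    return usable_gb * cores_per_slot_per_guest / total_cores_per_blade


def smallest_sufficient_cores(required_gb: float,
                              total_gb_memory_per_blade: float,
                              total_cores_per_blade: int,
                              allowed_core_counts=(2, 4, 8)) -> Optional[int]:
    """Return the smallest allowed core count that meets required_gb, or None."""
    for cores in sorted(allowed_core_counts):
        if guest_memory_gb(total_gb_memory_per_blade, cores, total_cores_per_blade) >= required_gb:
            return cores
    return None


# The B2150 example from the text: 32 GB per blade, eight cores, 8 GB required.
print(guest_memory_gb(32, 2, 8))             # 7.25
print(guest_memory_gb(32, 4, 8))             # 14.5
print(smallest_sufficient_cores(8, 32, 8))   # 4
```

Running the check once per planned guest gives the per-slot figures that you can then add up and compare with the blade's total memory.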

About slot assignment for a guest

On the vCMP® system, the host assigns some number of slots to each guest based on information you provide when you initially create the guest. The key information that you provide for slot assignment is the maximum and minimum number of slots that a host can allocate to the guest, as well as the specific slots on which the guest is allowed to run. With this information, the host determines the number of slots and the specific slot numbers to assign to each guest.

As a best practice, you should configure every guest so that the guest can span all slots in the cluster whenever possible. The more slots that the host can assign to a guest, the lighter the load is on each blade (that is, the fewer the number of connections that each blade must process for that guest).

About single-core guests

On platforms with hard drives, the vCMP® host always allocates cores on a slot for a guest in increments of two cores. On blades with solid-state drives, however, the host can allocate a single core to a guest, but only when the guest requires exactly one core; the host does not allocate any other odd number of cores per slot for a guest (such as three, five, or seven cores).

Because a single-core guest has a relatively small amount of CPU and memory allocated to it, F5 Networks supports only these products or product combinations for a single-core guest:

- BIG-IP® Local Traffic Manager™ (LTM®) only

- BIG-IP® Local Traffic Manager™ (LTM®) and BIG-IP® DNS (previously Global Traffic Manager) only

- BIG-IP® DNS (previously Global Traffic Manager) standalone only

Scalability considerations

When managing a guest's slot assignment, or when removing a blade from a slot assigned to a guest, there are a few key concepts to consider.

About initial slot assignment

When you create a vCMP® guest, the number of slots that you initially allow the guest to run on determines the maximum total resource allocation possible for that guest, even if you add blades later. For example, in a four-slot VIPRION® chassis that contains two blades, if you allow a guest to run on two slots only and you later add a third blade, the guest continues to run on two slots and does not automatically expand to acquire additional resource from the third blade. However, if you initially allow the guest to run on all slots in the cluster, the guest will initially run on the two existing blades but will expand to run on the third slot, acquiring additional traffic processing capacity, if you add another blade.

Because each connection causes some amount of memory use, the fewer the connections that the blade is processing, the lower the percentage of memory that is used on the blade compared to the total amount of memory allocated on that slot for the guest. Configuring each guest to span as many slots as possible reduces the chance that memory use will exceed the available memory on a blade when that blade must suddenly process additional connections.

If you do not follow the best practice of instructing the host to assign as many slots as possible for a guest, you should at least allow the guest to run on enough slots to account for an increase in load per blade if the number of blades is reduced for any reason.

In general, F5 Networks strongly recommends that when you create a guest, you assign the maximum number of available slots to the guest to ensure that as few additional connections as possible are redistributed to each blade, therefore resulting in as little increase in memory use on each blade as possible.

About changing slot assignments

At any time, you can increase or decrease the number of slots a guest runs on by re-configuring the number of slots that you initially assigned to the guest. Note that you can do this while a guest is processing traffic, either to increase the guest's resource allocation or to reclaim host resources.

When you increase the number of slots that a guest is assigned to, the host attempts to assign the guest to those additional slots. The host first chooses those slots with the greatest number of available cores. The change is accepted as long as the guest is still assigned to at least as many slots as dictated by its Minimum Number of Slots value. If the additional number of slots specified is not currently available, the host waits until those additional slots become available and then assigns the guest to these slots until the guest is assigned to the desired total number of slots. If the guest is currently in a deployed state, VMs are automatically created on the additional slots.

When you decrease the number of slots that a guest is assigned to, the host removes the guest from the most populated slots until the guest is assigned to the correct number of slots. The guest's VMs on the removed slots are deleted, although the virtual disks remain on those slots for reassignment later to another guest. Note that the number of slots that you assign to a guest can never be less than the minimum number of slots configured for that guest.
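The slot selection rule for an increase (slots with the most available cores first) can be sketched as follows. This is a simplified model, not the host's actual algorithm: the function name is hypothetical, the tie-breaking rule (lower slot number first) is an assumption, and the sketch ignores the case where the host waits for slots to become available.

```python
def choose_additional_slots(available_cores: dict[int, int],
                            assigned: set[int],
                            extra_slots: int) -> list[int]:
    """Pick up to `extra_slots` unassigned slots, preferring the most available cores."""
    candidates = [slot for slot in available_cores if slot not in assigned]
    # Most available cores first; ties go to the lower slot number (an assumption).
    candidates.sort(key=lambda slot: (-available_cores[slot], slot))
    return candidates[:extra_slots]


# Hypothetical four-slot chassis: slot number -> currently available cores.
available = {1: 2, 2: 6, 3: 4, 4: 6}
print(choose_additional_slots(available, assigned={1}, extra_slots=2))  # [2, 4]
```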

Effect of blade removal on a guest

If a blade suddenly becomes unavailable, the total traffic processing resource for guests on that blade is reduced and the host must redistribute the load on that slot to the remaining assigned slots. This increases the number of connections that each remaining blade must process and therefore the amount of memory used per blade. Fortunately, when you instruct the host to allocate some amount of memory to the guest, the host allocates that amount of memory to every slot in the guest's cluster.

Be aware, however, that if a blade goes offline so that the number of connections per blade increases, the increased percentage of memory used per blade compared to the amount of memory allocated could cause swapping and degraded performance. You can prevent this result by making sure you allocate enough cores to the guest, per slot, when you create the guest.

Example of blade removal and memory use

A blade going offline increases the amount of memory being used on the remaining blades. The following example helps to explain this concept.

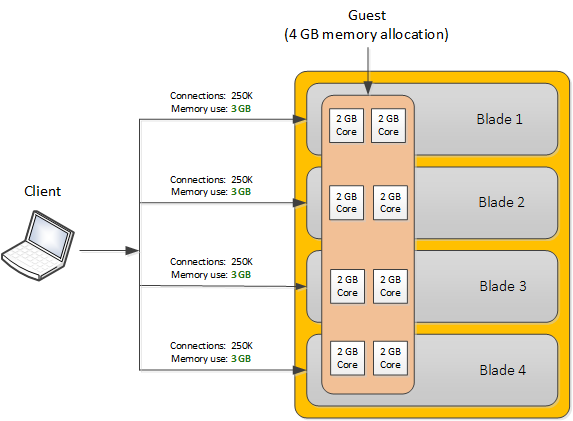

Suppose you have a guest spanning four slots that process 1,000,000 connections combined, where each slot is processing a quarter of the connections to the guest. Notice that the host administrator has allocated 4 GB of memory to the guest, and there is a current memory use of 3 GB for every 250,000 connections.

All blades are functional with normal memory use per blade

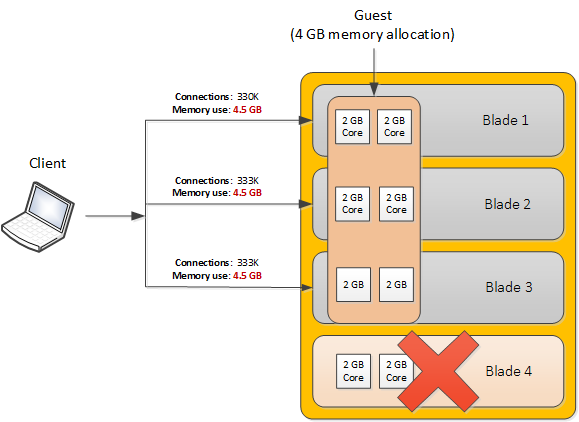

Now suppose a blade goes offline. In this case, each remaining blade must now process a third of the connections (333,333), which might increase memory use per blade to 4.5 GB (for example).

The following illustration shows that when a blade goes offline, memory use can exceed the 4 GB available on each blade due to the increase in number of connections per blade:

An offline blade causes memory use to approach memory allocation per blade

In this example, you could keep memory use within the allocation on each blade by allocating four cores per slot instead of two, so that 8 GB of memory is available per blade. This greatly reduces the risk of performance degradation when blade loss occurs.
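The arithmetic in this example can be reproduced with a short Python sketch. The function names are illustrative. The 4.5 GB figure is the document's own illustrative estimate of memory use after blade loss and is passed in as an input; the sketch does not attempt to predict memory use from the connection count.

```python
def connections_per_blade(total_connections: int, active_blades: int) -> int:
    """Connections each blade handles when load is spread evenly (rounded down)."""
    return total_connections // active_blades


def exceeds_allocation(estimated_use_gb: float, allocated_gb: float) -> bool:
    """Return True if estimated memory use is above the per-slot allocation."""
    return estimated_use_gb > allocated_gb


print(connections_per_blade(1_000_000, 4))  # 250000 with all four blades
print(connections_per_blade(1_000_000, 3))  # 333333 after one blade goes offline

print(exceeds_allocation(3.0, 4.0))  # normal operation, 4 GB allocated
print(exceeds_allocation(4.5, 4.0))  # after blade loss, 4 GB allocated
print(exceeds_allocation(4.5, 8.0))  # after blade loss, 8 GB allocated
```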

Effect of blade re-insertion on a guest

When you remove a blade from the chassis, the host remembers which guests were allocated to that slot. If you then re-insert a blade into that slot, the host automatically allocates cores from that blade to the guests that were previously assigned to that slot.

Whenever the host assigns guests to a newly-inserted blade, those guests that are below their Minimum Number of Slots threshold are given priority; that is, the host assigns those guests to the slot before guests that are already assigned to at least as many slots as their Minimum Number of Slots value. Note that this is the only time when a guest is allowed to be assigned to fewer slots than specified by its Minimum Number of Slots value.
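The priority rule for a newly inserted blade can be illustrated with a simple ordering. This is a sketch under assumptions: the `Guest` type and `assignment_order` function are hypothetical, and the order among guests in the same priority group is not described in this document, so the sketch simply preserves the input order.

```python
from dataclasses import dataclass


@dataclass
class Guest:
    """Hypothetical record of a guest's current and minimum slot counts."""
    name: str
    assigned_slots: int
    min_slots: int


def assignment_order(guests: list[Guest]) -> list[str]:
    """Order guests so that those below their minimum slot count come first."""
    # The key is False for guests below their minimum, and False sorts before True.
    # sorted() is stable, so guests keep their input order within each group.
    ordered = sorted(guests, key=lambda g: g.assigned_slots >= g.min_slots)
    return [g.name for g in ordered]


guests = [
    Guest("guest-a", assigned_slots=2, min_slots=2),
    Guest("guest-b", assigned_slots=1, min_slots=2),  # below its minimum
    Guest("guest-c", assigned_slots=3, min_slots=1),
    Guest("guest-d", assigned_slots=0, min_slots=1),  # below its minimum
]
print(assignment_order(guests))
```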

Network throughput for guests

To manage network throughput for a vCMP® guest, you should understand the throughput capacity of your blade type, as well as the throughput limit you want to apply to each guest:

- Throughput capacity per blade

- Each blade type on a VIPRION® system has a total throughput capacity, which defines the combined upper limit on throughput for guests on a blade. For example, on a B2100 blade with one single-slot guest, the guest can process up to 40 Gbps (with ePVA enabled). If the single-slot guest needs to process more than 40 Gbps, you can expand the guest to run on more slots.

- Throughput limits per guest

- Throughput requirements for a guest are typically lower than the throughput capacity of the blades on which the guest runs. Consequently, you can define a specific network throughput limit for each guest. When vCMP is provisioned on the system, you define a guest's throughput limit by logging in to the vCMP host and creating a rate shaping object known as a Single Rate Three Color Marker (srTCM) Policer. You then assign the policer to one or more guests when you create or modify the guests. It is important that the srTCM values that you assign to a guest do not exceed the combined throughput capacity of the blades pertaining to that guest.
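As a quick sanity check of the last point, the following Python sketch compares a planned throughput limit for a guest with the combined capacity of the blades on which the guest runs. The function name is hypothetical, and the check assumes that combined capacity is simply the per-blade capacity multiplied by the number of slots; it does not create or validate an actual srTCM policer.

```python
def policer_limit_ok(limit_gbps: float, blade_capacity_gbps: float, slots: int) -> bool:
    """Return True if a guest's throughput limit fits its blades' combined capacity."""
    return limit_gbps <= blade_capacity_gbps * slots


# 40 Gbps per blade is the B2100 figure quoted above (with ePVA enabled).
print(policer_limit_ok(10, 40, slots=1))  # limit well under one blade's capacity
print(policer_limit_ok(60, 40, slots=1))  # limit above a single blade's capacity
print(policer_limit_ok(60, 40, slots=2))  # same limit fits once the guest spans two slots
```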

SSL resource allocation

On certain models, you can control the way that the vCMP system allocates high-performance SSL hardware resources to vCMP guests. Specifically, you can configure one of three SSL modes for each guest:

- Shared mode

- This mode causes the guest to share its consumption of SSL hardware resource with other guests that are also in Shared mode. In this case, guests with the most need for SSL resources consume more of the total resource available. This is the default SSL mode.

- Dedicated mode

- This mode dedicates a fixed amount of SSL hardware resource to a guest. When you configure this option for a guest, the amount of resource that the system allocates to the guest is based on the guest's core allocation. In Dedicated mode, the guest is guaranteed a fixed amount of resource and this amount is not affected by the amount of resource that other guests consume.

- None

- This option prevents a guest from consuming any SSL hardware resources. This option also prevents the guest from consuming compression hardware resources.

About compression resource allocation

On blade models that include compression hardware processors, the vCMP® host allocates an equal share of the hardware compression resource among all guests on the system, in a round robin fashion.

Additionally, on certain blade models, the vCMP host automatically disables the allocation of compression hardware resources to a guest whenever you also disable the allocation of SSL hardware resources to that guest.

Guest states and resource allocation

As a vCMP® host administrator, you can control when the system allocates or de-allocates system resources to a guest. You can do this at any time, by setting a guest to one of three states: Configured, Provisioned, or Deployed. These states affect resource allocation in these ways:

- Configured

- This is the initial (and default) state for a newly-created guest. In this state, the guest is not running, and no resources are allocated. If you change a guest from another state to the Configured state, the vCMP host does not delete any virtual disks that were previously attached to that guest; instead, the guest's virtual disks persist on the system. The host does, however, automatically de-allocate other resources such as CPU and memory. When the guest is in the Configured state, you cannot configure the BIG-IP® modules that are licensed to run within the guest; instead, you must set the guest to the Deployed state to provision and configure the BIG-IP modules within the guest.

- Provisioned

- When you change a guest state from Configured to Provisioned, the vCMP host allocates system resources to the guest (CPU, memory, and any unallocated virtual disks). If the guest is new, the host creates new virtual disks for the guest and installs the selected ISO image on them. A guest does not run while in the Provisioned state. When you change a guest state from Deployed to Provisioned, the host shuts down the guest but retains its current resource allocation.

- Deployed

- When you change a guest to the Deployed state, the vCMP host activates the guest virtual machines (VMs), and the guest administrator can then provision and configure the BIG-IP modules within the guest. For a guest in this state, the vCMP host starts and maintains a VM on each slot for which the guest has resources allocated. If you are a host administrator and you reconfigure the properties of a guest after its initial deployment, the host immediately propagates the changes to all of the guest VMs and also propagates the list of allowed VLANs.
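The effect of each guest state on resource allocation can be summarized in a small lookup table. The following Python sketch is only a summary of the descriptions above; the enum and function names are illustrative and do not correspond to any F5 API.

```python
from enum import Enum


class GuestState(Enum):
    CONFIGURED = "configured"
    PROVISIONED = "provisioned"
    DEPLOYED = "deployed"


# For each state: (CPU and memory allocated, guest VMs running).
# Virtual disks persist in every state once they have been created.
_EFFECTS = {
    GuestState.CONFIGURED: (False, False),
    GuestState.PROVISIONED: (True, False),
    GuestState.DEPLOYED: (True, True),
}


def has_cpu_and_memory(state: GuestState) -> bool:
    """Return True if the host has CPU and memory allocated to the guest."""
    return _EFFECTS[state][0]


def is_running(state: GuestState) -> bool:
    """Return True if the guest's VMs are running."""
    return _EFFECTS[state][1]


for state in GuestState:
    print(state.value, has_cpu_and_memory(state), is_running(state))
```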